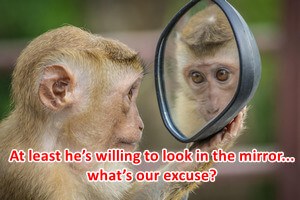

We humans hate looking in the mirror.

Time and again, we’d rather point fingers at the “new scary thing” than admit that the real monster has always been our own reflection.

Recently, I watched a video featuring Ilya Sutskever (ex-OpenAI) and Eric Schmidt (ex-Google CEO) sharing their visions of a future with AI.

Their warnings sound dramatic… jobs disappearing, runaway superintelligence, societies unprepared for what’s ahead.

And I get why people latch onto that narrative.

But AI itself isn’t the real danger.

History Repeats Itself

The fear of machines taking over is nothing new.

During the Industrial Revolution, weaving machines were seen as the end of livelihoods.

Printing presses were condemned as dangerous.

Even electricity was viewed as destabilizing.

And yet, what happened?

Humanity adapted.

New roles emerged.

Productivity soared.

More importantly, the standard of living and overall quality of life improved dramatically.

We had safer homes, access to better goods, more time to pursue education, and opportunities that simply didn’t exist before.

Every era of disruption has been followed by an era of greater abundance… not because the technology was harmless, but because we figured out how to use it wisely.

The Real Monster

Here’s what we should actually be afraid of: unchecked human greed.

AI doesn’t “want” anything.

It’s not plotting to take over.

The real danger lies in who controls the infrastructure.

- Who owns the data pipelines?

- Who controls the compute power?

- Who writes the rules… or avoids them altogether?

In the wrong hands, AI isn’t a tool of innovation. It becomes a weapon.

Just like the splitting of the atom.

The atom gave us nuclear energy… a nearly limitless, low-carbon source of power.

It also gave us nuclear weapons… the ability to destroy cities in seconds.

The atom itself wasn’t dangerous.

The danger was in what humans chose to do with it.

AI is no different.

Guardrails, Not Panic

Instead of panicking about AI as if it were a sentient overlord, we need to demand accountability:

- Transparent laws.

- Global guardrails.

- Policies that prevent AI from being captured and abused by the few.

The danger isn’t the circuits or the code.

It’s unchecked human ambition, amplified by AI.

Closing Thought

Ilya Sutskever is right about one thing: AI will be the greatest challenge humanity has faced.

But not because machines are out to replace us.

The true challenge will be choosing what kind of humans we are in the process.

Do we give in to greed, fear, and control?

Or do we build with responsibility, collaboration, and fairness?

The mirror is uncomfortable, but it’s the only place the real answers live.

Watch the video here:

Then ask yourself: Do you fear AI… or the people who want to own it?

Header Credit: Image by Victoria from Pixabay